Architecture

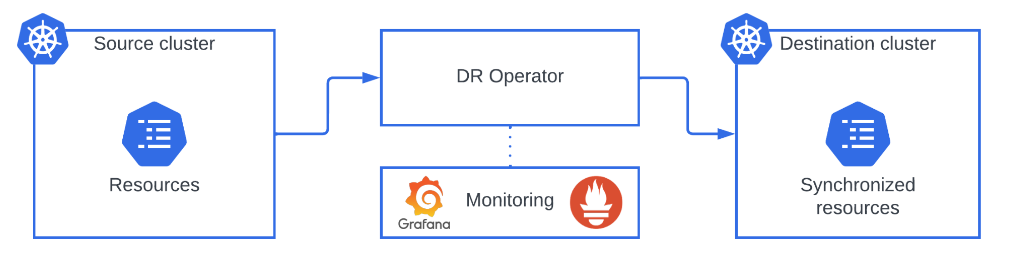

The cluster is protected by a paired warm standby cluster, to which workloads are offloaded when a disaster occurs. Resources can remain deactivated in the destination cluster until such an event takes place, which avoids unnecessary resource consumption and keeps organizational costs down.

Resiliency Operator extracts the resources from the source cluster and syncs them to the destination cluster, maintaining a consistent state between them.

Operator monitoring is attached to the operator and is independent of both clusters.

The operator can be deployed in either a 2-clusters or 3-clusters architecture.

2-clusters

This configuration is recommended for training, testing, validation or when the 3-clusters option is not optimal or possible.

The currently active cluster is the source cluster, and the passive one is the destination cluster. The operator, including all its Custom Resource Definitions (CRDs) and processes, is installed in the destination cluster. It listens for new resources in the source cluster that fulfill the requirements and clones them into the destination cluster.

The source cluster is never aware of the destination cluster and can exist and operate as normal without it. The destination cluster needs access to the source cluster, which is provided through a KubernetesCluster resource.
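The listen-and-clone behaviour described above can be illustrated with a minimal Python sketch. It is not the operator's implementation: the names `matches` and `sync_once` are hypothetical, the clusters are modelled as in-memory collections, and "the requirements" are assumed here to be a simple label match, which the text does not specify.

```python
import copy


def matches(resource: dict, required_labels: dict) -> bool:
    """Return True if the resource carries every required label (assumed criterion)."""
    labels = resource.get("metadata", {}).get("labels") or {}
    return all(labels.get(k) == v for k, v in required_labels.items())


def sync_once(source: list, destination: dict, required_labels: dict) -> list:
    """Clone each matching source resource into the destination store.

    `source` stands in for the objects read from the source cluster and
    `destination` for the destination cluster's state, keyed by
    (kind, namespace, name). Returns the keys that were cloned.
    """
    cloned = []
    for resource in source:
        if not matches(resource, required_labels):
            continue  # resources that do not fulfill the requirements are ignored
        meta = resource["metadata"]
        key = (resource["kind"], meta.get("namespace", ""), meta["name"])
        # Deep copy so the destination never shares state with the source.
        destination[key] = copy.deepcopy(resource)
        cloned.append(key)
    return cloned
```

Note that the source side is only read in this sketch, which mirrors the point that the source cluster does not need to know about the destination.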

3-clusters

In addition to the two existing clusters, this modality includes a management cluster. The operator synchronization workflow is delegated to it instead of depending on the destination cluster. The management cluster is in charge of reading the changes and new resources in the source cluster and syncing them to the destination. Neither the source nor the destination cluster needs to know of the existence of the management cluster, and both can operate without it.

Having a separate cluster that is decoupled from direct production activity lowers operational risks and eases access control for both human and software operators.

The operator also needs to be installed in the destination cluster so that the recovery process can be started without depending on other clusters. Custom Resources that configure the synchronization are deployed in the management cluster, while those that are only relevant when executing the recovery process are deployed in the destination cluster.

This structure fits organizations that already depend on a management cluster for other tasks or that plan to do so. Resiliency Operator does not require a standalone management cluster and can be installed and managed from an existing one.

Components

Synchronization across clusters is managed through Kubesync, the Astronetes solution for Kubernetes cluster replication. The following components are deployed when synchronization between two clusters is started:

| Component | Description | Source cluster permissions | Destination cluster permissions |
|---|---|---|---|
| Events listener | Reads events in the source cluster. | Cluster reader | N/A |
| Processor | Filters and transforms the objects read from the source cluster. | Cluster reader | N/A |
| Synchronizer | Writes processed objects to the destination cluster. | N/A | Write |
| Reconciler | Sends delete events whenever it finds discrepancies between source and destination. | Cluster reader | Cluster reader |
| NATS | Used by other components to send and receive data. | N/A | N/A |
| Redis | Stores metadata about the synchronization state. Most LiveSynchronization components interact with it. | N/A | N/A |
| Metrics exporter | Exports metrics about the LiveSynchronization status. | N/A | N/A |
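
As an illustration of the Reconciler row, the following Python sketch shows one way delete events could be derived from a comparison of both clusters. It is a sketch under stated assumptions, not Kubesync's actual logic: the function names and the event shape are hypothetical, and a "discrepancy" is assumed to mean an object that exists in the destination but no longer exists in the source.

```python
def find_stale(source_keys: set, destination_keys: set) -> list:
    """Return keys present in the destination but absent from the source.

    Keys are assumed to be (kind, namespace, name) tuples.
    """
    return sorted(destination_keys - source_keys)


def delete_events(source_keys: set, destination_keys: set) -> list:
    """Build one hypothetical delete event per stale destination object."""
    return [
        {"type": "DELETE", "key": key}
        for key in find_stale(source_keys, destination_keys)
    ]
```

Because the comparison only needs to list objects on each side, it is consistent with the read-only permissions the table assigns to the Reconciler on both clusters; in this sketch the resulting events would be handed to another component to act on.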